AI chatbots such as ChatGPT, Gemini, and Claude have become extremely popular. Used appropriately, they provide quick answers, break down complex concepts, and assist with decision-making and reasoning. They are efficient and can boost your productivity. The best part is that you can use them on the go on your smartphone or Android tablet, or at your desk through a web interface.

Because AI chatbots seem conversational and non-judgmental, it is easy to divulge sensitive information without realizing it. Whether you use these tools in your personal life or at work, sharing too much can lead to unintended negative consequences. While not everyone has the same privacy needs, it’s best not to reveal the following types of data to an AI bot.

7

Contact information

There’s no reason to enter your contact information, like your phone number, home address, or email address, into an AI platform. Even if you think providing that information could help the chatbot give better answers, it’s best to avoid it.

While the company you share it with might not misuse your data, there’s a possibility a hacker could steal the information. Cybercriminals could use these details to send you phishing links or access your social media accounts. Keep your contact information private to maintain your security.
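If you paste text into a chatbot regularly, one option is to scrub contact details from it first. The following is a minimal sketch, not a complete solution: the function name `redact_contact_info` and both patterns are illustrative assumptions, and the phone pattern only covers common US-style formats.

```python
import re

# Illustrative patterns only (assumptions): real contact details vary widely,
# so treat this as a first pass, not a guarantee.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches common US-style numbers such as 555-123-4567 or (555) 123-4567.
PHONE_RE = re.compile(
    r"(?:\+?1[\s.-]?)?(?:\(\d{3}\)|\d{3})[\s.-]?\d{3}[\s.-]?\d{4}"
)


def redact_contact_info(prompt: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    prompt = EMAIL_RE.sub("[EMAIL]", prompt)
    prompt = PHONE_RE.sub("[PHONE]", prompt)
    return prompt


if __name__ == "__main__":
    draft = "Email me at jane.doe@example.com or call 555-123-4567."
    print(redact_contact_info(draft))
```

A filter like this will miss unusual formats and street addresses, so it is still worth rereading a prompt before you send it.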

6

Login credentials

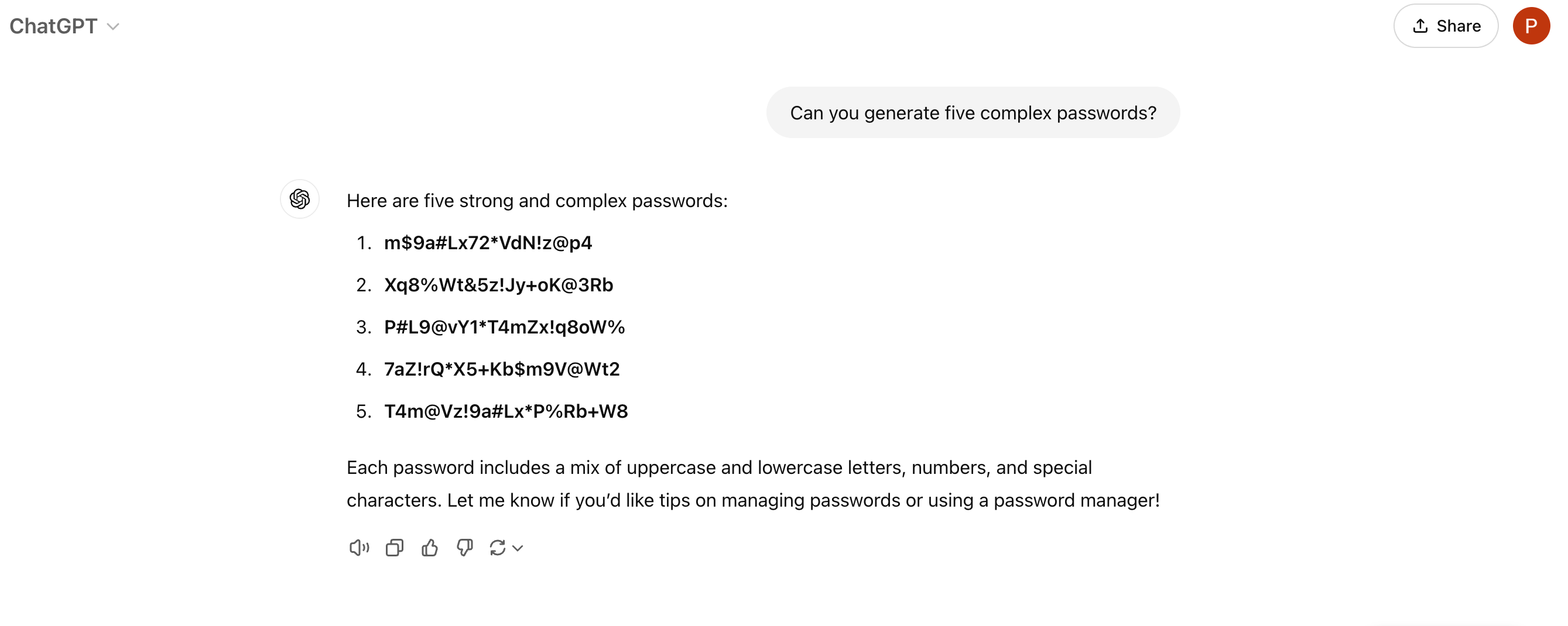

Another thing you don’t want to share with AI chatbots is your login information. Using AI bots to generate usernames and passwords, or to keep track of your credentials, puts your accounts at risk if the platform suffers a data breach.

If you’re looking for an effective alternative, password managers can store your passwords so that you don’t have to remember them. Many of these tools also generate new, complex passwords for your online accounts, meaning you won’t have to rely on an AI bot.
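To show that generating a strong password doesn’t require sending anything to a chatbot, here is a minimal sketch using Python’s standard `secrets` module, which runs entirely on your own machine. The function name `generate_password` and the default length of 20 are illustrative assumptions, not how any particular password manager works.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password with lower, upper, digit, and symbol characters."""
    if length < 4:
        raise ValueError("length must be at least 4")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from the operating system's secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is present at least once.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

A password manager remains the more practical choice, since it also stores the password for you.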

5

Personally Identifiable Information (PII)

You should never share Personally Identifiable Information (PII) with AI chatbots. This includes any data that can help a third party identify you, such as your Social Security number, driver’s license number, or passport details.

If this information is exposed in a data breach, hackers can use it to commit identity theft or financial fraud. The consequences of such an incident can be long-lasting and might damage your finances.

4

Work-related data

Several organizations, even those building their own AI models, restrict the use of generative AI at work, and for good reason. Information you input into an AI platform can become part of training datasets, and the chatbot might then surface that information in its responses to other users. For confidential business information, the consequences could be disastrous.

Before you share work-related data with an AI chatbot, check your organization’s policies regarding the use of generative AI in the workplace. If you’re permitted to use AI, do so with caution. Using AI to fast-track your work projects can be tempting, but entering information into an AI model is not too different from publishing it online. You don’t know who might access it or use it.

3

Medical data

Many people turn to AI chatbots to brainstorm possible diagnoses, receive advice, and better understand their health. Recently, people have also uploaded their X-rays and scans to AI platforms to get a better interpretation of their results. However, just because you can doesn’t mean you should, especially when it comes to your health.

Medical data held by healthcare providers and insurers is subject to privacy laws like HIPAA in the US, which restrict those organizations from disclosing your data to third parties without your consent. Consumer AI chatbots are generally not bound by HIPAA regulations, so they don’t provide the same level of protection. If you don’t want your medical data used in a training dataset, keep the information to yourself.

2

Intellectual property

It’s best not to share your intellectual property, whether it’s unpublished writing, artwork, or ideas, with AI. The problem isn’t so much that AI companies might use the information you share for profit. Instead, when your IP is on an online platform, other people can access it.

Whether through a data breach or the data becoming public via training, other parties might use your intellectual property for commercial or personal gain. Unless you’re comfortable losing control over your IP, keep it off AI platforms.

1

Financial details

AI models can be useful for generating budgets, identifying where you can reduce expenses, and breaking down complex financial concepts. However, while these chatbots can help with general recommendations, don’t share your paystubs, investment details, bank account numbers, or credit card details with any AI platform.

Although tech companies developing AI chatbots attempt to keep their platforms secure, vulnerabilities may exist. Hackers could exploit these holes to access your data and use it for nefarious reasons. A financial advisor is a safer option than an AI chatbot.
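If you want general budgeting help from text that may contain card numbers, one precaution is to mask them before pasting. The sketch below is an illustration under stated assumptions: the names `luhn_valid` and `mask_card_numbers` are hypothetical, and it only looks for 13 to 19 digit sequences that pass the Luhn checksum used by payment card numbers. It does not detect bank account numbers or other financial details.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CANDIDATE_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_card_numbers(text: str) -> str:
    """Replace digit sequences that look like valid card numbers with [CARD]."""

    def _mask(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        return "[CARD]" if luhn_valid(digits) else match.group()

    return CANDIDATE_RE.sub(_mask, text)


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test number, not a real card.
    print(mask_card_numbers("My card is 4111 1111 1111 1111, thanks"))
```

The checksum step reduces false positives on order numbers and similar digit strings, but it can still miss or mislabel some sequences, so review the text yourself as well.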

Using AI chatbots safely

You have limited control over how the data you share in your prompts is stored. Companies can also change their policies and share your data with more vendors. Choosing reputable platforms like ChatGPT or Gemini mitigates some of these issues, since they are more likely to have secure systems and stronger privacy policies.

Still, it’s best to err on the side of caution and limit the information you share with any AI chatbot. Treat your prompts on AI platforms as you would the information you share on social media or blogs. Assume that people you don’t know might use the information in ways you didn’t intend.

If the platforms allow it, clear your chat histories and opt out of having your data used for training purposes to protect your privacy. Also, keep an eye on the privacy and data retention policies so that you know how the company uses the information you share.